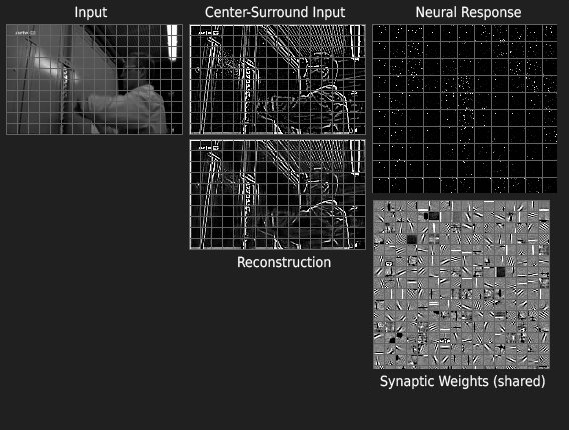

My current online feature abstraction seems to work fine now. After being fed a 30-minute video once, the system abstracts features (or “components”) of the input by changing each virtual neuron's synapses (right plot in image). This is done in a way that yields a sparse and quasi-binary neural response to the input (“sparse” to decorrelate the mixed signals and “quasi-binary” to be tolerant to noise).

The reconstruction shows that the essential information is preserved in the sparse neural response and can be recovered from it.
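
To make the idea concrete, here is a minimal sketch of online sparse feature learning with a reconstruction step. It is not the code behind the plots above, only an illustration under my own assumptions: sparsity is enforced with a k-winners-take-all rule, the quasi-binary response comes from a steep sigmoid, encoder and decoder share one weight matrix `W` (the "synapses"), and the input is synthetic data rather than video frames. All names (`encode`, `decode`, `online_step`, `demo`, `gain`, `k`) are hypothetical.

```python
import numpy as np

def encode(W, x, k=2, gain=8.0):
    """Sparse, quasi-binary response: only the k most strongly driven units fire."""
    a = W @ x                                   # drive of each virtual neuron
    y = np.zeros_like(a)
    top = np.argsort(a)[-k:]                    # indices of the k strongest units
    y[top] = 1.0 / (1.0 + np.exp(-gain * a[top]))  # steep sigmoid pushes values toward 0 or 1
    return y

def decode(W, y):
    """Reconstruct the input from the sparse response (tied weights)."""
    return W.T @ y

def online_step(W, x, k=2, lr=0.05):
    """One online update: move the active units' synapses toward the reconstruction error."""
    y = encode(W, x, k)
    err = x - decode(W, y)
    W += lr * np.outer(y, err)                  # inactive units (y == 0) are left unchanged
    W /= np.maximum(np.linalg.norm(W, axis=1, keepdims=True), 1e-8)  # keep rows at unit length
    return y

def mean_sq_error(W, X, k=2):
    """Average squared reconstruction error over a list of inputs."""
    return float(np.mean([np.sum((x - decode(W, encode(W, x, k))) ** 2) for x in X]))

def demo(seed=0, n_in=16, n_units=8, k=2, steps=5000):
    rng = np.random.default_rng(seed)
    # Synthetic "mixed signals": each input is the sum of k hidden components.
    D = rng.normal(size=(n_units, n_in))
    D /= np.linalg.norm(D, axis=1, keepdims=True)

    def sample():
        s = np.zeros(n_units)
        s[rng.choice(n_units, size=k, replace=False)] = 1.0
        return D.T @ s

    W = rng.normal(size=(n_units, n_in))
    W /= np.linalg.norm(W, axis=1, keepdims=True)
    X_eval = [sample() for _ in range(200)]

    before = mean_sq_error(W, X_eval, k)
    for _ in range(steps):                      # each sample is seen once, in stream order
        online_step(W, sample(), k)
    after = mean_sq_error(W, X_eval, k)
    return before, after, encode(W, X_eval[0], k)

if __name__ == "__main__":
    before, after, y = demo()
    print("reconstruction error before/after:", before, after)
    print("example response:", np.round(y, 2))
```

Because the response is close to binary, it carries which components are present but not their amplitude, so in this sketch the inputs are built from unit-strength components. Real video frames would need some normalization first, which is left out here.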
