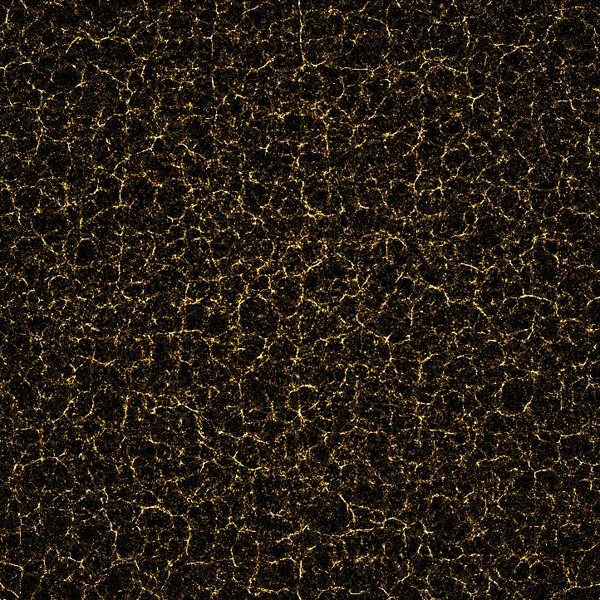

This was an attempt to simulate the structure-forming process in the universe.

3 million particles were initially placed at random positions within a sphere of space so they appear as a shapeless, homogenous fog.

Now every particle interacts with all others by gravity. Just by that they would implode into a single dense region. To avoid this a “vacuum-force” is applied which pushes each particle outwards in proportion to its location from the center. This counter-acts the collapse caused by the gravitational field and instead the particles converge into this sponge-like pattern. Where filaments connect into dense regions would be the location of star-clusters (and within those you would see galaxies), but for each “star-cluster” there are only a few particles left (around 40), so you cannot see more detail than that with “just” 3 million particles.

The pattern actually matches quite well to serious super-computer calculations done by astrophysicist.

I did the simulation in 3D as well, but basically the same pattern emerges – just 3D (but its not so nicely visualizable and many more particles would be needed).